A Proposal of Joystick-type Input System that is Easy to Operate for Physical Disability Persons

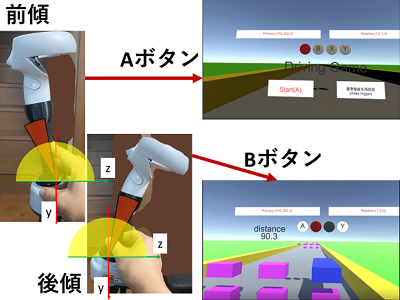

Some people with physical disabilities have difficulty pressing the buttons on a controller and therefore cannot enjoy content such as VR games. I developed a joystick-type input system as a new input method that is easy for people with physical disabilities to operate. As the input device, I used an existing VR controller and the joystick of an electric wheelchair, connected by a joint. Users can perform the operation corresponding to a button press simply by tilting this device. Using this system, I made a game in which the player avoids obstacles by making full use of jumping and bombarding. The system determines which of four postures the input device is in: tilted to the front, back, left, or right. It assigns the four button inputs A, B, Y, and X to these postures, and users operate VR-oriented content with these four inputs. To determine the posture, I use the rotation angle that can be obtained from the existing VR controller every frame. This system may expand the range of content that people with physical disabilities can enjoy, both within and beyond VR. In addition, people without physical disabilities can also use the system if they sit in an electric wheelchair, so it can enable content that people with and without physical disabilities enjoy in the same way.
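
The posture-to-button step described above could be sketched roughly as follows. This is a minimal illustration in Python, not the implementation used in the system. The function names, the use of separate pitch and roll angles in degrees, the 15-degree dead zone, and the pairing of each direction with a particular button are all assumptions made for the example; the abstract only states that four postures are mapped to A, B, Y, and X using the controller's per-frame rotation angle.

```python
from typing import Optional

# Hypothetical pairing: the abstract names four postures and four buttons
# but does not say which posture triggers which button.
BUTTON_FOR_POSTURE = {"front": "A", "back": "B", "left": "Y", "right": "X"}


def normalize_angle(deg: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def classify_posture(pitch_deg: float, roll_deg: float,
                     threshold_deg: float = 15.0) -> Optional[str]:
    """Return 'front', 'back', 'left', 'right', or None if the device is near neutral.

    Assumed convention: positive pitch means tilted forward,
    positive roll means tilted to the right.
    """
    pitch = normalize_angle(pitch_deg)
    roll = normalize_angle(roll_deg)

    # Inside the dead zone, small wobbles do not count as a tilt.
    if max(abs(pitch), abs(roll)) < threshold_deg:
        return None

    # The axis with the larger tilt decides the posture.
    if abs(pitch) >= abs(roll):
        return "front" if pitch > 0 else "back"
    return "right" if roll > 0 else "left"


def button_for_frame(pitch_deg: float, roll_deg: float,
                     threshold_deg: float = 15.0) -> Optional[str]:
    """Map one frame's rotation angles to a button name, or None for no input."""
    posture = classify_posture(pitch_deg, roll_deg, threshold_deg)
    return BUTTON_FOR_POSTURE[posture] if posture is not None else None
```

Calling `button_for_frame` once per frame with the controller's current angles would yield at most one button per frame. The dead zone is a design choice intended to keep the neutral position from triggering input by accident, and its size would likely need tuning for each user.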