Effect of Fake Heart Sounds on Anxiety While Experiencing High-Altitude VR Content

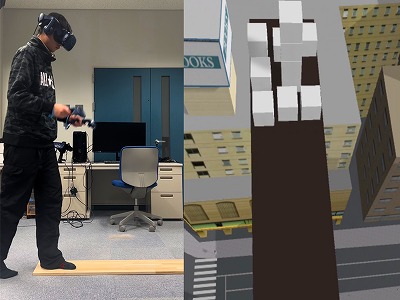

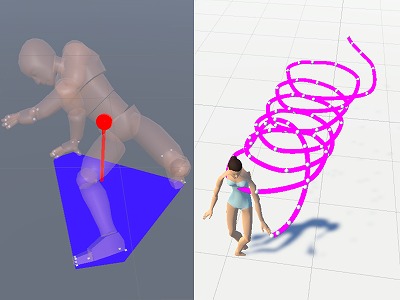

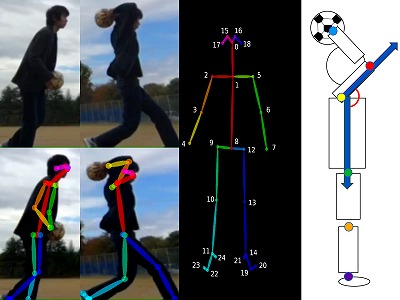

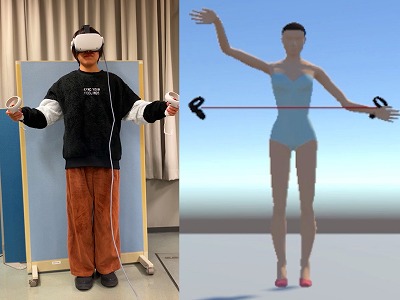

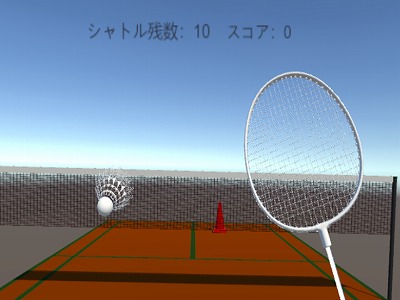

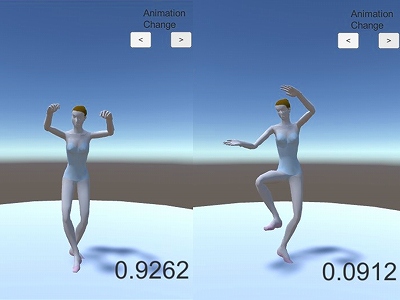

In recent years, VR technology has attracted attention as a new method for treating acrophobia. In this research, I aimed to verify the effect of fake heart sounds on anxiety in the treatment of acrophobia, using the placebo effect in which people who hear fake heart sounds feel as if the sounds come from their own heart. I created a high-altitude VR experience using Unity and conducted an experiment in which fake heart sounds that gradually became faster were presented to the subjects. While wearing a head-mounted display (HMD), the subjects performed a task in a virtual space: walking to the edge of a board protruding from a skyscraper and picking up a box. Each subject performed the task under two experimental conditions, with and without fake heart sounds, and the subjects were divided into two groups that differed in the order of the conditions. I evaluated how the subjects' anxiety changed using a questionnaire and pulse measurement. In the experiment with six subjects, fear and anxiety were higher after experiencing the heart-sound condition than in the no-heart-sound condition, and an increase in pulse rate was also confirmed. Both the questionnaire and the pulse measurements confirmed a limited placebo effect.